Why are accurate transcriptions important? Because errors cause confusion, waste time, and can have serious consequences in sectors such as law and medicine. This article analyzes the main causes of transcription errors, from audio problems to difficulties with accents and technical language, and proposes practical solutions to improve accuracy.

Key points:

- Common problems: Background noise, overlapping voices, non-native accents, technical language, and speaker confusion.
- Main causes: Technological limitations, poor audio quality, and lack of specialized knowledge.
- Practical solutions:
  - Manual reviews to ensure quality.
  - Use of customized vocabularies and audio enhancement tools.
  - Better speaker identification and standardized templates.
- Continuous improvement: Feedback systems that learn from errors, reducing error rates by roughly 10% to 30%.

Accurate transcriptions not only optimize productivity but are also key to avoiding misunderstandings and ensuring informed decisions in any work environment.


Most Common Errors in Real-Time Transcriptions

Understanding these errors is key to implementing strategies that improve the accuracy of transcriptions.

Audio Quality Problems

Environmental noise and technical distortions account for up to 45% of transcription errors. Noise sources include simultaneous conversations, machinery, traffic, and even air conditioning, all of which can mask the spoken words. As a result, the system misinterprets words or drops entire phrases.

Simultaneous speech, where several people speak at the same time, can reduce the accuracy of transcriptions by up to 25%. This problem is common in dynamic meetings, where participants tend to interrupt or actively debate.

Another challenge is muffled voice, which occurs when participants are far from the microphone, use low-quality devices, or have poor connections in video calls. This can lead to misinterpreted words or the omission of entire segments.

Additionally, technical distortions, such as audio cuts, signal interference, or connection drops, create gaps in the transcriptions or incorrect interpretations of the affected words.

| Problem | Description | Impact on Transcription |
|---|---|---|
| Background noise | Sounds that make it difficult to hear the words | Misinterpreted or omitted words |
| Overlapping voices | Multiple people speaking at the same time | Up to 25% reduction in accuracy |
| Muffled voice | Poor audio quality or distance from the microphone | Omitted segments |
| Technical distortions | Audio cuts or interference | Gaps and errors in interpretation |

Problems with Accents and Technical Language

Beyond audio problems, accents and technical language pose a significant challenge. Transcription systems show error rates of 16% to 28% for non-native accents or regional pronunciations. According to the National Institute of Standards and Technology (NIST), speakers with regional accents tend to have higher word error rates (WER), whereas rates for native speakers fall between 6% and 12%.

Technical language also complicates transcriptions. Specialized terms, acronyms, and jargon specific to each industry are often not included in the standard dictionaries of transcription systems.

However, multilingual models trained on more diverse data have shown progress. For example, Google improved the accuracy of its transcriptions by 30% by including different accents in its training data. Similarly, Amazon Alexa increased recognition rates for non-native English speakers by more than 40% by expanding its datasets.

Speaker Confusion and Formatting Issues

Misidentifying speakers is another frequent challenge. Similar-sounding voices or abrupt changes of turn make it harder to attribute each statement to the right person. The problem gets worse when participants record on devices with different levels of audio quality.

In addition, inconsistent formatting, such as incorrect speaker labels or inadequate punctuation, makes transcriptions harder to read and understand, especially when they are long or complex. Incorrect segmentation, where the system fails to separate one speaker's turn from the next, can produce confusing paragraphs that mix different people's ideas.

These errors not only affect the clarity of transcriptions but also limit their usefulness. In the next section, we will analyze the main causes of these problems and possible solutions to improve accuracy.

Why Transcription Errors Occur

To improve transcriptions, it is crucial to understand what causes errors. Factors such as technological limitations, poor audio quality, and lack of specialized knowledge play an important role. Here we analyze how these elements directly affect accuracy.

Limitations of Voice Recognition Software

Automatic speech recognition (ASR) systems face technical challenges that affect their ability to transcribe accurately. For example, advanced tools like Whisper can achieve word error rates (WER) as low as 2-3% in audiobooks recorded in ideal conditions. However, in real conversations, these rates can spike to 10-30%.
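Word error rate, the metric behind these figures, is conventionally computed as the word-level edit distance between a reference transcript and the system output (substitutions + deletions + insertions), divided by the number of words in the reference. A minimal sketch, assuming simple lowercase whitespace tokenization; real evaluations usually normalize punctuation, casing, and numbers more carefully:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (S + D + I) / N using a word-level Levenshtein distance."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dp[i][j] = minimum edits needed to turn ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution (or match if cost is 0)
            )
    return dp[len(ref)][len(hyp)] / len(ref)


reference = "please send the contract to the legal team"
hypothesis = "please send a contract to legal team"
# One substitution ("the" -> "a") and one deletion ("the") over 8 reference words
print(f"WER = {word_error_rate(reference, hypothesis):.2%}")
```

Because insertions count as errors, WER can exceed 100% when the output contains many extra words.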

“Voice recognition struggles to be accurate in noisy environments or with varied accents and dialects. Factors like background noise, overlapping voices, or low-quality microphones affect performance. Additionally, understanding context and resolving ambiguous phrases remains a significant challenge. Homophones, which sound the same but have different meanings, require context to be interpreted correctly.” – milvus.io

Accuracy also varies with the speaker. Studies have found that ASR systems make roughly twice as many errors on audio from Black speakers as on audio from White speakers. In real-time use, synchronization delays of up to 500 milliseconds can also put the transcript out of step with the audio.

Poor Recording Quality

The quality of the audio is the foundation of an accurate transcription. Background noise, distance from the microphone, and low-quality equipment can all reduce voice clarity, especially in videoconferences with unstable connections. In one notable case, a law firm recovered a low-quality recording by combining advanced noise reduction software with professional transcribers.

Additional problems, such as echoes and other audio defects, complicate the process even further, increasing the time required to obtain accurate transcriptions.

Lack of Specialized Knowledge

The accuracy of a transcription depends not only on identifying words correctly but also on interpreting technical terms and the context in which they are used. In medical, technical, or corporate settings, a transcriber without domain experience can make serious errors that compromise the quality of the content.

To reduce these problems, it is essential to have transcribers with experience in the area or provide them with glossaries containing relevant terminology. Identifying these limitations is the first step to implementing solutions that will be addressed later.

How to Solve Accuracy Issues in Transcriptions

Tackling accuracy issues in transcriptions requires practical strategies that can significantly improve the quality of the final result.

Manual Review and Quality Controls

Human intervention remains essential to ensure accuracy. Although artificial intelligence systems have advanced greatly, the nuances and context of language often require direct supervision to avoid errors. Quality controls ensure that transcriptions are useful for subsequent analysis.

For example, in studies on parliamentary debates in Portuguese, the error rate was reduced to 1.7% thanks to thorough review processes. In the medical field, where accuracy can be critical, quality controls managed to reduce errors by 11%.

Manual review is particularly useful for identifying errors that automatic systems do not catch. Breaking long audio files into smaller segments facilitates more detailed review and reduces reviewer fatigue, improving overall accuracy.
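A simple way to plan that segmentation is to cut the recording into fixed-length windows with a small overlap, so a word that straddles a boundary is heard in both segments. The sketch below only computes timestamps; the 5-minute segment length and 5-second overlap are illustrative assumptions, not recommended values:

```python
from typing import List, Tuple


def plan_review_segments(
    total_seconds: float,
    segment_seconds: float = 300.0,  # assumed 5-minute review chunks
    overlap_seconds: float = 5.0,    # assumed overlap at each boundary
) -> List[Tuple[float, float]]:
    """Split [0, total_seconds] into overlapping (start, end) review windows."""
    if segment_seconds <= overlap_seconds:
        raise ValueError("segment length must exceed the overlap")
    segments = []
    start = 0.0
    while start < total_seconds:
        end = min(start + segment_seconds, total_seconds)
        segments.append((start, end))
        if end >= total_seconds:
            break
        # Step back by the overlap so words at the boundary appear in both chunks
        start = end - overlap_seconds
    return segments


def fmt(seconds: float) -> str:
    """Format seconds as HH:MM:SS."""
    m, s = divmod(int(seconds), 60)
    h, m = divmod(m, 60)
    return f"{h:02d}:{m:02d}:{s:02d}"


# A 20-minute recording split into overlapping review chunks
for start, end in plan_review_segments(20 * 60):
    print(fmt(start), "->", fmt(end))
```

Each window can then be assigned to a reviewer or reviewed in a separate sitting.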

Customized Word Lists and Audio Enhancements

Using customized vocabularies is a key tool for improving transcriptions. These lists enable systems to recognize proper names, acronyms, brands, and specific terms that might otherwise be misinterpreted. Customized language models also help in understanding the context of technical words or those that sound similar.
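Many ASR services accept a custom vocabulary or phrase list directly, with details that differ by provider. Where that is not available, a rough post-processing fallback is to snap near-miss words in the output to the closest entry in a domain glossary. The sketch below uses Python's standard `difflib.get_close_matches`; the glossary and the 0.8 similarity cutoff are hypothetical, and this fuzzy matching is a swapped-in technique, not how vocabulary biasing works inside a recognizer:

```python
import difflib
import re

# Hypothetical domain glossary (drug names plus a product name)
GLOSSARY = ["metformin", "atorvastatin", "lisinopril", "Jamy"]


def apply_glossary(text: str, glossary: list[str], cutoff: float = 0.8) -> str:
    """Replace words that closely resemble a glossary term with that term."""
    # Map lowercase forms back to the glossary's preferred spelling
    lowered = {term.lower(): term for term in glossary}

    def fix(match: re.Match) -> str:
        word = match.group(0)
        hits = difflib.get_close_matches(word.lower(), list(lowered), n=1, cutoff=cutoff)
        return lowered[hits[0]] if hits else word

    # Only alphabetic runs are checked, so punctuation and spacing are preserved
    return re.sub(r"[A-Za-z]+", fix, text)


print(apply_glossary("Start metforman and continue lisinoprill daily.", GLOSSARY))
```

A high cutoff matters here: set it too low and ordinary words that merely resemble a glossary term get overwritten, so the corrected output still deserves a review.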

“Noise harms the accuracy of AI; with clean audio, performance improves.”

– John Fishback, Video Editor

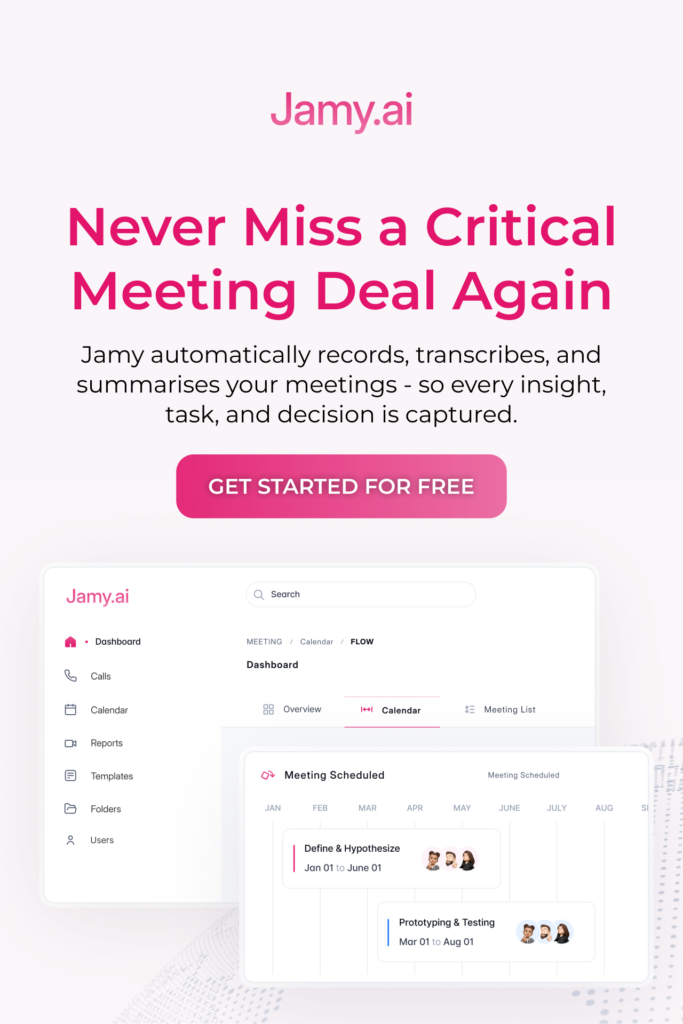

The quality of the audio is also crucial. Recording in quiet spaces, employing noise reduction, and normalizing volume can make a significant difference. Tools like Jamy.ai offer options for training customized vocabularies, adapting to the specific needs of each sector.
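Volume normalization, for instance, can be as simple as scaling every sample so the recording reaches a target RMS level. The sketch below works on a plain list of float samples in the range [-1.0, 1.0]; the -20 dBFS target is an illustrative assumption, and a production pipeline would typically rely on a dedicated audio library and a loudness standard instead:

```python
import math


def rms_dbfs(samples: list[float]) -> float:
    """Root-mean-square level in dB relative to full scale (1.0)."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def normalize_rms(samples: list[float], target_dbfs: float = -20.0) -> list[float]:
    """Scale samples to the target RMS level, clipping to [-1.0, 1.0]."""
    current = rms_dbfs(samples)
    if current == float("-inf"):
        return list(samples)  # pure silence: nothing to scale
    gain = 10 ** ((target_dbfs - current) / 20)
    return [max(-1.0, min(1.0, s * gain)) for s in samples]


# A quiet 440 Hz tone sampled at 16 kHz (amplitude 0.01, roughly -43 dBFS)
quiet = [0.01 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(16000)]
louder = normalize_rms(quiet)
print(f"{rms_dbfs(quiet):.1f} dBFS -> {rms_dbfs(louder):.1f} dBFS")
```

Note that normalization raises the noise floor along with the voice, so it complements noise reduction rather than replacing it.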

Better Speaker Detection and Standardized Templates

Accurate identification of speakers is vital in transcriptions with multiple participants. To improve this aspect, it is important to use well-positioned microphones that clearly capture all interlocutors and avoid overlapping voices. Additionally, consistently labeling speakers improves accuracy over time, and some advanced systems can connect to calendars to suggest contact names.

Another key aspect is standardizing the format of transcriptions. Standardized templates streamline the process, ensure consistency, and present the content professionally. These templates often include speaker labels, timestamps, and headings to better organize the information.
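As an illustration, a template can be expressed as a small rendering function that turns diarized segments into a consistently formatted document. The `Segment` record, the title line, and the `[HH:MM:SS] Speaker: text` layout below are hypothetical choices, not a standard; merging consecutive segments from the same speaker keeps each paragraph to a single speaker:

```python
from dataclasses import dataclass


@dataclass
class Segment:
    speaker: str
    start: float  # seconds from the start of the recording
    text: str


def timestamp(seconds: float) -> str:
    """Format seconds as HH:MM:SS."""
    m, s = divmod(int(seconds), 60)
    h, m = divmod(m, 60)
    return f"{h:02d}:{m:02d}:{s:02d}"


def render_transcript(title: str, segments: list[Segment]) -> str:
    """Render segments as '[HH:MM:SS] Speaker: text', one paragraph per turn."""
    turns: list[Segment] = []
    for seg in segments:
        if turns and turns[-1].speaker == seg.speaker:
            # Same speaker continues: extend the current turn
            turns[-1].text += " " + seg.text
        else:
            # Copy the segment so the caller's data is not mutated
            turns.append(Segment(seg.speaker, seg.start, seg.text))
    lines = [title, ""]
    for turn in turns:
        lines.append(f"[{timestamp(turn.start)}] {turn.speaker}: {turn.text}")
    return "\n".join(lines)


meeting = [
    Segment("Ana", 0.0, "Let's review the budget."),
    Segment("Ana", 4.2, "Marketing is over by ten percent."),
    Segment("Luis", 9.8, "That includes the trade show."),
]
print(render_transcript("Weekly budget meeting", meeting))
```

Keeping the layout in one function means every transcript comes out with the same labels and timestamp format, which is the consistency a template is meant to provide.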

Defining clear requirements and collaborating with transcription professionals to create these templates is essential. It is also useful to review them periodically to ensure they fit current needs. Complementing this approach with industry-specific glossaries helps maintain uniform records, a crucial aspect in fields where accuracy is indispensable.

With these strategies, it is possible to approach the 99% accuracy standard, which corresponds to about ten errors in a 1,000-word transcription. This shows that, with the right tools, transcriptions can meet the highest quality standards.

How Feedback Systems Improve Transcriptions Over Time

In addition to manual reviews, automated feedback systems are transforming automatic transcriptions into increasingly accurate tools. These systems not only correct errors but also learn from them, adjusting to the specific needs of users. This approach creates a cycle of continuous improvement that reinforces both accuracy and efficiency. Two fundamental pillars of this process are error detection and machine learning.

Error Identification and User Participation

Today, many platforms allow for real-time correction of errors. This is especially useful in live events or meetings, where immediate accuracy can make a significant difference.

Confidence scores are a key element of this process. They identify the least reliable words in a transcription and highlight them automatically for review, so instead of examining the entire text, users can focus on the passages that truly need attention, saving time and effort.
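Assuming the recognizer returns a confidence value between 0 and 1 for each word (many ASR APIs expose something similar, under different field names), highlighting for review can be a simple threshold. The 0.6 threshold and the `[[word?]]` marker are arbitrary choices for this sketch:

```python
def flag_low_confidence(words: list[tuple[str, float]], threshold: float = 0.6) -> str:
    """Wrap words whose confidence falls below the threshold in [[...?]] markers."""
    out = []
    for word, confidence in words:
        out.append(f"[[{word}?]]" if confidence < threshold else word)
    return " ".join(out)


def review_ratio(words: list[tuple[str, float]], threshold: float = 0.6) -> float:
    """Fraction of words a reviewer actually needs to check."""
    flagged = sum(1 for _, confidence in words if confidence < threshold)
    return flagged / len(words) if words else 0.0


# Hypothetical per-word output: (word, confidence)
asr_output = [("the", 0.98), ("patient", 0.95), ("takes", 0.91),
              ("metforman", 0.42), ("twice", 0.88), ("daily", 0.57)]
print(flag_low_confidence(asr_output))
print(f"Review {review_ratio(asr_output):.0%} of the words")
```

Raising the threshold flags more words (safer, slower review); lowering it saves time but lets more errors through unchecked.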

Additionally, some advanced systems apply automatic corrections for recurring errors. For example, if a system often confuses “meeting” with “review” in certain contexts, it may learn to automatically correct this error. Tools like Jamy.ai go a step further, offering features like speaker labeling and real-time annotations. This not only improves accuracy but also better organizes the generated content.
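One simple way such learning could work: count how often users replace a word with another in the same context (here, just the preceding word), and once the same correction has been made a set number of times, apply it automatically. The `CorrectionMemory` class and the threshold of three are hypothetical; conditioning on context matters, because a blanket substitution would also change correct uses of the word:

```python
from collections import Counter


class CorrectionMemory:
    """Learns word replacements that users make repeatedly in the same context."""

    def __init__(self, min_count: int = 3):
        self.min_count = min_count
        # (previous word, misrecognized word, corrected word) -> times seen
        self.counts: Counter = Counter()

    def record(self, previous: str, wrong: str, right: str) -> None:
        """Store one user correction of `wrong` -> `right` after `previous`."""
        self.counts[(previous.lower(), wrong.lower(), right)] += 1

    def rules(self) -> dict:
        """Return {(previous, wrong): right} for corrections seen often enough."""
        learned = {}
        # most_common() is sorted by count, so the most frequent correction wins
        for (prev, wrong, right), n in self.counts.most_common():
            if n >= self.min_count and (prev, wrong) not in learned:
                learned[(prev, wrong)] = right
        return learned

    def apply(self, text: str) -> str:
        """Apply learned corrections word by word (whitespace is normalized)."""
        learned = self.rules()
        words = text.split()
        for i in range(1, len(words)):
            key = (words[i - 1].lower(), words[i].lower())
            if key in learned:
                words[i] = learned[key]
        return " ".join(words)


memory = CorrectionMemory()
for _ in range(3):  # a user fixes the same mistake three times
    memory.record("quarterly", "meeting", "review")
print(memory.apply("We scheduled the quarterly meeting and a team meeting"))
```

Only the occurrence after "quarterly" is changed; "team meeting" is left alone because no correction was ever recorded in that context.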

Machine Learning Models and Performance Analysis

Machine learning is the driving force behind these improvements. Modern systems evaluate their performance with metrics such as word error rate (WER), accuracy, and F1 score. These analyses show where a model falls short so it can be adjusted accordingly.
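Besides WER, precision, recall, and F1 are useful when what matters is whether specific items come through correctly, for example the terms in a domain glossary. In the sketch below (an assumed setup, not a standard benchmark), precision is the share of glossary terms in the output that also occur in the reference, recall is the share of reference terms the output captured, and F1 is their harmonic mean:

```python
from collections import Counter


def _terms_in(text: str, terms: set[str]) -> Counter:
    """Count occurrences of glossary terms (terms must be given in lowercase)."""
    words = (w.strip(".,;:!?").lower() for w in text.split())
    return Counter(w for w in words if w in terms)


def term_prf(reference: str, hypothesis: str, terms: set[str]) -> tuple[float, float, float]:
    """Precision, recall and F1 for glossary terms, counting repeated occurrences."""
    ref = _terms_in(reference, terms)
    hyp = _terms_in(hypothesis, terms)
    true_pos = sum((ref & hyp).values())  # Counter & keeps the minimum count per term
    precision = true_pos / sum(hyp.values()) if hyp else 0.0
    recall = true_pos / sum(ref.values()) if ref else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


TERMS = {"metformin", "insulin", "lisinopril"}  # hypothetical glossary
reference = "Switch from metformin to insulin and keep lisinopril."
hypothesis = "Switch from metformin to in sulin and keep lisinopril."
p, r, f = term_prf(reference, hypothesis, TERMS)
print(f"precision={p:.2f} recall={r:.2f} F1={f:.2f}")
```

Here the output never invents a wrong term (high precision) but misses "insulin" (lower recall), a failure mode that a single WER figure would blur together with every other word error.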

For example, direct feedback from users can reduce WER by 6% and improve speaker identification by 8%. If the system is also trained with specialized vocabulary, improvements can reach up to an additional 10%. In specialized sectors, the impact is even greater, with reductions in error rates ranging from 20% to 30% when using technical terminology.

Models like DeepSpeech have achieved error rates as low as 4% in controlled environments. Continuous feedback can help such systems retain more of that accuracy under real-world conditions.

Tangible Results of Continuous Feedback

These technological improvements are not just theoretical; they have clear practical applications. For example, using advanced language models can reduce WER by 10%, while noise cancellation algorithms can improve accuracy by 15%. By combining these tools with effective feedback systems, the time spent on manual corrections decreases significantly.

Another important benefit is the ability to adapt to different accents and speaking styles. Models trained with a wider variety of audio show a 15% reduction in WER for non-native speakers. This progress is accelerated when systems receive constant feedback on these specific patterns.

Feedback also helps address challenging situations, such as interruptions or overlapping conversations. Although these conditions can reduce speaker diarization accuracy by 25%, systems that learn from user corrections develop more effective strategies to handle them.

Users employing these technologies report a more satisfying and productive experience. When they see systems improving over time, frustration from repetitive errors decreases, and confidence in these tools as allies in their daily work increases.

Conclusion: Accuracy for More Efficient Work

In today’s work environment, accurate transcriptions are not a luxury; they are a necessity. Transcription errors cause confusion and, in critical sectors such as law or medicine, can have serious consequences. In these fields every word counts, because a single misunderstanding can cause real harm. Ensuring clear and reliable communication therefore requires effective solutions.

Main Challenges and Solutions

Errors in transcriptions usually stem from poor audio quality, the variety of accents, and difficulty identifying speakers. These limitations arise from the capabilities of automated systems, the quality of the recordings, and a lack of customization in the algorithms.

To address these problems, it is crucial to implement strategies such as:

- Thorough manual reviews to detect and correct errors.
- Creation of customized vocabularies that reflect the specific context.
- Improvements in speaker identification, especially in conversations with multiple participants.

These actions not only increase accuracy but also reduce the margin of error in sectors where every detail is crucial.

The Importance of Continuous Improvement

Given these challenges, committing to continuous improvement is essential. Systems that integrate feedback allow tools to learn from their errors and adapt to the specific needs of each user. This approach not only optimizes the accuracy of transcriptions but also increases overall efficiency.

For example, the medical transcription market, which could reach 5.11 billion euros by 2028, reflects the impact of these technologies in key sectors. Companies that adopt systems with effective feedback often experience improvements in work quality and productivity.

Investing in tools that evolve with user needs not only reduces the time spent on manual corrections but also enhances the work experience. Additionally, constantly collecting and analyzing feedback helps to identify areas for improvement and prepare systems for future challenges.

An example of this evolution is Jamy.ai, a tool that adapts to the specific needs of each organization, demonstrating how technology can become an indispensable ally for a more reliable and efficient work environment.

FAQs

What impact do inaccurate transcriptions have in critical sectors such as legal or healthcare?

In sectors like healthcare and legal, accuracy in transcriptions is not optional; it is fundamental. An error in this area can have devastating consequences.

In medicine, an incorrect transcription could lead to misdiagnoses, inappropriate treatments, or even medication administration errors. These errors not only compromise patients’ health but can also put their lives at risk. Similarly, in the legal field, an inaccurate transcription can distort the meaning of key testimony or documents. This could influence judicial decisions, generate legal disputes, and ultimately undermine justice.

For these reasons, ensuring accuracy in transcriptions is indispensable. It not only prevents misunderstandings but also protects people’s safety and ensures compliance with regulations in these sectors where details are crucial.

How can transcription accuracy be improved in noisy environments?

Achieving accurate transcriptions in noisy places can be challenging, but there are ways to improve results by combining good practices and appropriate technology. For example, using high-quality microphones that minimize background noise is a key step. It is also important to record in the quietest spaces possible, as the environment has a direct impact on audio quality.
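To judge whether a space is quiet enough before recording, one option is to estimate the signal-to-noise ratio (SNR): record a few seconds of room noise alone, then a few seconds of speech, and compare their average power in decibels, SNR = 10·log10(P_speech / P_noise). The sketch below assumes both clips are available as lists of float samples and that the noise is uncorrelated with the speech; any pass/fail threshold you apply to the result is your own choice:

```python
import math
import random


def mean_power(samples: list[float]) -> float:
    """Average power (mean of squared samples)."""
    return sum(s * s for s in samples) / len(samples)


def estimate_snr_db(speech_clip: list[float], noise_clip: list[float]) -> float:
    """Estimate SNR in dB from a speech recording and a noise-only recording.

    The speech clip also contains room noise, so the speech power is taken as
    the clip's power minus the noise power (valid if the two are uncorrelated).
    """
    noise_power = mean_power(noise_clip)
    speech_power = mean_power(speech_clip) - noise_power
    if noise_power == 0:
        return float("inf")
    if speech_power <= 0:
        return float("-inf")  # no measurable speech above the noise floor
    return 10 * math.log10(speech_power / noise_power)


# Synthetic check: a tone with RMS 0.1 plus Gaussian noise with RMS 0.01 (about 20 dB)
rng = random.Random(0)
n = 16000
noise = [rng.gauss(0.0, 0.01) for _ in range(n)]
tone = [0.1 * math.sqrt(2) * math.sin(2 * math.pi * 200 * i / n) for i in range(n)]
speech = [t + rng.gauss(0.0, 0.01) for t in tone]
print(f"Estimated SNR: {estimate_snr_db(speech, noise):.1f} dB")
```

Comparing the estimate across candidate rooms or microphone positions gives a concrete basis for choosing the quietest setup.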

Additionally, resorting to software with noise reduction technology can make a significant difference. These programs filter out unwanted sounds and allow voice recognition models to adjust to the context in which they are being used. Customizing these models according to the specific needs of the environment greatly enhances their performance.

Lastly, advanced tools such as AI assistants offer practical solutions. These technologies not only automate repetitive tasks but also allow for personalized adjustments, contributing to greater accuracy, saving time, and facilitating work.

How do feedback systems help improve accuracy in automatic transcriptions?

Feedback systems are essential for refining the accuracy of automatic transcriptions. Thanks to them, it is possible to detect errors in transcriptions and adjust the artificial intelligence models to correct them. This process can be carried out through human reviews or through automated algorithms designed for this purpose.

Every time a correction is made, the system learns from the errors and improves its ability to interpret speech. Over time, this results in more accurate and reliable transcriptions. This dynamic approach allows transcriptions to constantly adapt to the specific needs of users, offering a more tailored and effective service.